Love affair with CBIR (Content-Based Image Retrieval) Part 4

Update:

I never expected this to become a series of articles. Here are the links to the rest of it:

Love affair with CBIR – Part 1

Love affair with CBIR – Part 2

Love affair with CBIR – Part 3

Love affair with CBIR – Part 4

(The one you are reading)

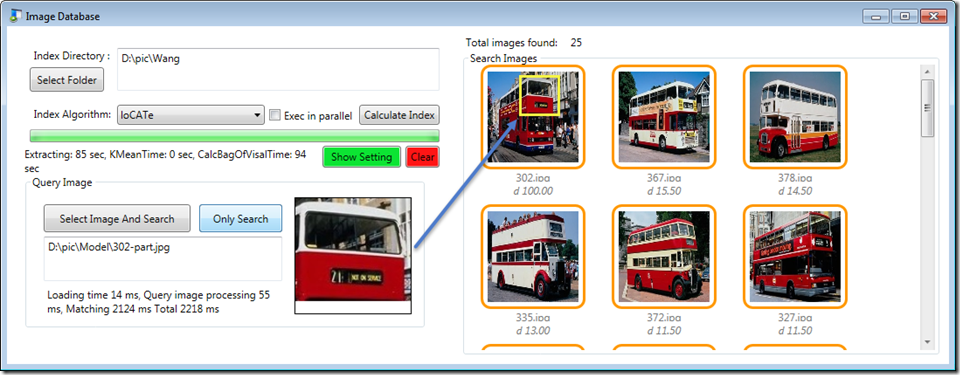

In this article, I will walk through the sample application that I created to demonstrate how CBIR works. This is a continuation of the series, so if you have trouble following this post, read the previous ones to understand the context.

In the last article, you probably read about the theory involved in finding similar images. But it will not really sink in until you see working code. That was my frustration when I was researching CBIR. I found tons of papers from universities around the world, but it was hard to find a working demo, especially in .NET. I googled for days and found blogs and articles, but most of them do not share implementation code. Most computer vision work happens in C++, MATLAB, or Python; there are very few takers for .NET languages.

That is why I decided to help the poor souls like me who are searching for a CBIR implementation in .NET with this proof-of-concept application. It should especially help college students who are trying to learn the C# language and the .NET Framework and build a CBIR system all at the same time. For them, this should be a boon, like an oasis in the desert of the internet.

I will demo the application on the Wang image dataset. You can download its 1,000 test images, which consist of 10 sets of 100 images each. This will help you understand how reverse image search works and how effective each algorithm is at finding similar images.

You can download the Image Database application from https://github.com/sbrakl/ImageDatabase

License

Note: The application I have built is under the GPLv3 license, but the libraries it uses (Emgu CV, Accord Framework, etc.) are not all under the same license. If you need to use it in a commercial application, please check the license of each library used in this application and use it appropriately.

This utility was developed in WPF on .NET Framework 4.5 as a proof of concept for various image recognition algorithms.

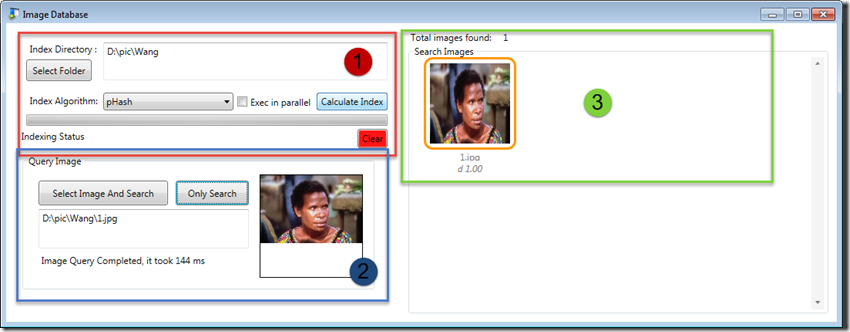

This utility consists of three areas:

1 – This is the index section. Before you query an image with your favorite algorithm, you need to index the image directory for that algorithm. Select the image directory that contains all the images and click ‘Calculate Index’. Note that the image directory must contain all images at the root of the folder; images nested in sub-directories will not be indexed.

The index needs to be calculated only once for the selected folder, after which you can query it multiple times. With this utility, you can only search images that have been indexed. If an image was missed during indexing, it will not be returned by a query either.

2 – This is the query image section. Select an image and search. ‘Only Search’ is for comparing the same query image against multiple algorithms: just change the algorithm in the dropdown and click ‘Only Search’ to query the same image with the newly selected algorithm.

3 – This is the result area, where images visually similar to the queried one are displayed.

Let us analyze each algorithm in detail, starting with the binary algorithms.

Binary Algorithms

Binary algorithms are good at finding duplicates, but they cannot help with similar-image search. You can read more about this in Part 2 of this series.

- Perceptual Hash

pHash has been implemented using the SimilarImageDotNet library, written by ‘Carlos Ballesteros Velasco’. It computes the hash as a string.

    // Load the image and compute its compressed perceptual hash as a string
    using (Bitmap bmp = new Bitmap(Image.FromFile(fi.FullName)))
    {
        compressHash = SimilarImage.GetCompressedImageHashAsString(bmp);
    }

You can compare two images with

    var dist = SimilarImage.CompareHashes(queryImageCompressHash, imgInfo.CompressHash);

The returned value ranges between 0 and 1, where 0 means completely different and 1 means completely identical.
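To make the 0-to-1 score more concrete, here is a minimal sketch of how a similarity can be derived from two perceptual hashes. It assumes each hash is a 64-bit value and that similarity is one minus the fraction of differing bits; `HashSimilaritySketch` and its methods are hypothetical names for illustration, and this is not necessarily how SimilarImageDotNet computes its score.

```csharp
using System;

public static class HashSimilaritySketch
{
    // Counts the bits that differ between two 64-bit hashes (assumed hash size).
    public static int HammingDistance(ulong a, ulong b)
    {
        ulong x = a ^ b;
        int count = 0;
        while (x != 0)
        {
            x &= x - 1; // clears the lowest set bit
            count++;
        }
        return count;
    }

    // Maps the Hamming distance to [0, 1]: 1 means identical hashes, 0 means every bit differs.
    public static double Similarity(ulong a, ulong b)
    {
        return 1.0 - HammingDistance(a, b) / 64.0;
    }
}
```

With a score like this, a de-duplication pass would typically treat pairs above some chosen cut-off (for example 0.95) as duplicates; the right cut-off depends on your images.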

- RGB Histogram

The RGB algorithm uses the color histogram for comparison. I have used the Eye.Open similar image finder library. It converts the image into a histogram of RGB color (a two-dimensional array). During comparison, it calculates the mean square distance between the two histograms and returns that distance.

    // Build the RGB projections for the query image, then compare them with an indexed image
    queryProjections = new RgbProjections(ImageUtility.GetRgbProjections(bitmap));
    var dist = imgInfo.RGBProjection.CalculateSimilarity(queryProjections);
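For readers who want to see what a mean square distance between two such arrays looks like, here is a minimal sketch. It assumes both histograms are jagged `double[][]` arrays of identical shape; `MeanSquareSketch` is a hypothetical helper, not part of the Eye.Open library, whose internal calculation may differ.

```csharp
using System;

public static class MeanSquareSketch
{
    // Averages the squared differences over every cell of two equally shaped arrays.
    // 0 means the histograms are identical; larger values mean less similar images.
    public static double MeanSquaredDifference(double[][] a, double[][] b)
    {
        if (a.Length != b.Length) throw new ArgumentException("Arrays must have the same shape.");
        double sum = 0;
        int cells = 0;
        for (int i = 0; i < a.Length; i++)
        {
            if (a[i].Length != b[i].Length) throw new ArgumentException("Arrays must have the same shape.");
            for (int j = 0; j < a[i].Length; j++)
            {
                double diff = a[i][j] - b[i][j];
                sum += diff * diff;
                cells++;
            }
        }
        return cells == 0 ? 0 : sum / cells;
    }
}
```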

- Bhattacharyya histogram algorithm

In the second post, I described the ‘Jakob XnaFan Krarup’ implementation on CodeProject. It resizes the image to 16×16, converts it to grayscale, and uses the R channel to calculate the normalized histogram (a jagged array of doubles) of the image. Then it compares the normalized histograms using the Bhattacharyya coefficient formula to get the distance. In my implementation, I convert the result into a percentage difference: 0% means the images are identical, 100% means they are completely different, and 30% means the query image is 30% different from the searched image. The lower the number, the better the match for this algorithm.

    // Normalized histogram of the query image, then its percentage difference from an indexed histogram
    queryHistogram = BhattacharyyaCompare.Bhattacharyya.CalculateNormalizedHistogram(img);
    var dist = BhattacharyyaCompare.Bhattacharyya.CompareHistogramPercDiff(queryHistogram, norHist);

Those were the binary algorithms; now it is time for the global descriptors.
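The Bhattacharyya coefficient itself is a short formula: the sum over all bins of sqrt(p[i] * q[i]) for two normalized histograms p and q. The sketch below shows it on flat `double[]` histograms and assumes the percentage difference is (1 - coefficient) * 100; that mapping and the `BhattacharyyaSketch` class are my illustration, and the CodeProject code may use a different layout or scaling.

```csharp
using System;

public static class BhattacharyyaSketch
{
    // Converts raw bin counts into a histogram whose bins sum to 1.
    public static double[] Normalize(int[] counts)
    {
        double total = 0;
        foreach (int c in counts) total += c;
        var result = new double[counts.Length];
        if (total == 0) return result; // no samples: leave all bins at 0
        for (int i = 0; i < counts.Length; i++) result[i] = counts[i] / total;
        return result;
    }

    // Bhattacharyya coefficient: 1 for identical normalized histograms, 0 for no overlap.
    public static double Coefficient(double[] p, double[] q)
    {
        if (p.Length != q.Length) throw new ArgumentException("Histograms must have the same length.");
        double sum = 0;
        for (int i = 0; i < p.Length; i++) sum += Math.Sqrt(p[i] * q[i]);
        return sum;
    }

    // Assumed mapping to a percentage: 0 means identical, 100 means no overlap at all.
    public static double PercentDifference(double[] p, double[] q)
    {
        double bc = Math.Min(1.0, Coefficient(p, q)); // guard against rounding slightly above 1
        return (1.0 - bc) * 100.0;
    }
}
```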

Global Descriptors

- CEDD

Global descriptors are signatures that describe the image as a whole. They differ from the binary algorithms in that they consider color, edges, and texture to describe the image, rather than the simple projections used by the binary algorithms. CEDD was created by Savvas A. Chatzichristofis and is available on his website: http://chatzichristofis.info/?page_id=15

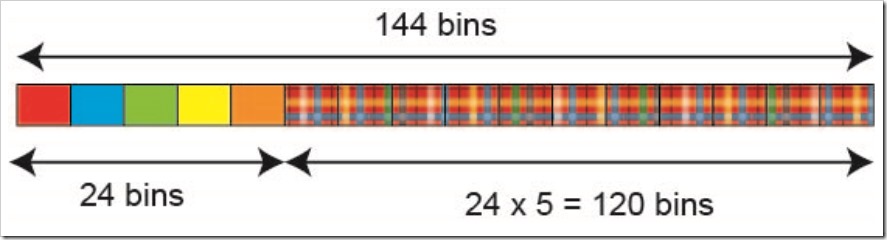

The CEDD (Color and Edge Directivity Descriptor) descriptor consists of 144 bins (a double array of length 144). The bins are organized as 6 texture regions of 24 bins each: the 24 bins in a region represent the 24 shades of color used by the system, and the region itself classifies the image area by texture. You can download the code to calculate the CEDD descriptor from Savvas's site.

You can get the CEDD descriptor of a bitmap with the following code

    // cedd is an instance of the CEDD descriptor class created earlier
    double[] queryCeddDiscriptor;
    using (Bitmap bmp = new Bitmap(Image.FromFile(queryImagePath)))
    {
        queryCeddDiscriptor = cedd.Apply(bmp);
    }

Two descriptors are compared using the Tanimoto coefficient, as follows

    var dist = CEDD_Descriptor.CEDD.Compare(queryCeddDiscriptor, ceddDiscriptor);
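For reference, the Tanimoto coefficient of two vectors x and y is (x·y) / (x·x + y·y − x·y). The sketch below is a minimal, standalone version of that formula; `TanimotoSketch` is a hypothetical class, and the 0-to-100 distance scale is an assumption on my part rather than a statement about what `CEDD.Compare` returns.

```csharp
using System;

public static class TanimotoSketch
{
    // Tanimoto coefficient: 1 for identical vectors, 0 when the vectors share nothing.
    public static double Coefficient(double[] x, double[] y)
    {
        if (x.Length != y.Length) throw new ArgumentException("Descriptors must have the same length.");
        double xy = 0, xx = 0, yy = 0;
        for (int i = 0; i < x.Length; i++)
        {
            xy += x[i] * y[i];
            xx += x[i] * x[i];
            yy += y[i] * y[i];
        }
        double denominator = xx + yy - xy;
        return denominator == 0 ? 1.0 : xy / denominator; // two all-zero vectors count as identical
    }

    // Assumed distance scale: 0 for identical descriptors, 100 for completely different ones.
    public static double Distance(double[] x, double[] y)
    {
        return 100.0 * (1.0 - Coefficient(x, y));
    }
}
```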

In my experiments, CEDD gave much better results than the binary algorithms, as you can see below. When you search for a bus, it brings back all the remaining bus images from the database.

Notice that the green ‘Show/Hide Setting’ button appears when you select CEDD. While developing the CEDD search, I considered a hypothetical problem: say I have a million images. With a linear approach, the query descriptor must be compared against every one of the million descriptors before the right ones are found, which would be slow. I took a hint from RDBMS indexes: databases do not compare every row in a table; they build an index around it. MS SQL Server builds B-tree clustered/non-clustered indexes on particular columns, which is why queries are blazing fast.

I applied the same concept here and created a CEDD BK-tree index. Since comparing two descriptors is not as simple as comparing strings or integers, I need the Tanimoto distance between them. The idea is to arrange the descriptors so that similar ones, those with a lower distance to each other, are placed near each other. During a search, I then need to compare only a fraction of the total descriptors (10% of a million in our case) to find the nearest values, so a search down the tree does far less work than a linear scan of all n descriptors. If you could not follow a word of this paragraph: in short and plain English, search is blazing fast using the BK-tree index.
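To illustrate the idea, here is a minimal generic BK-tree sketch. It assumes the distance function returns a non-negative integer and satisfies the triangle inequality (a real-valued distance such as the Tanimoto distance would need to be rounded or bucketed first). `BkTreeSketch<T>` is a hypothetical class for illustration, not the index implementation used in the application.

```csharp
using System;
using System.Collections.Generic;

public sealed class BkTreeSketch<T>
{
    private sealed class Node
    {
        public readonly T Item;
        // Children are keyed by their distance to this node's item.
        public readonly Dictionary<int, Node> Children = new Dictionary<int, Node>();
        public Node(T item) { Item = item; }
    }

    private readonly Func<T, T, int> _distance;
    private Node _root;

    public BkTreeSketch(Func<T, T, int> distance) { _distance = distance; }

    public void Add(T item)
    {
        if (_root == null) { _root = new Node(item); return; }
        Node current = _root;
        while (true)
        {
            int d = _distance(item, current.Item);
            Node child;
            if (!current.Children.TryGetValue(d, out child))
            {
                current.Children[d] = new Node(item);
                return;
            }
            current = child; // a child already sits at this distance: descend into it
        }
    }

    // Returns every stored item whose distance to the query is at most radius.
    public List<T> Search(T query, int radius)
    {
        var results = new List<T>();
        if (_root == null) return results;
        var stack = new Stack<Node>();
        stack.Push(_root);
        while (stack.Count > 0)
        {
            Node node = stack.Pop();
            int d = _distance(query, node.Item);
            if (d <= radius) results.Add(node.Item);
            // Triangle inequality: only subtrees keyed within [d - radius, d + radius] can contain matches.
            foreach (KeyValuePair<int, Node> pair in node.Children)
            {
                if (pair.Key >= d - radius && pair.Key <= d + radius) stack.Push(pair.Value);
            }
        }
        return results;
    }
}
```

The pruning step in `Search` is where the speed-up comes from: whole subtrees are skipped without computing a single distance inside them. How much is skipped depends on the search radius and on how the distances are distributed.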

Note: You need to build an index for each index type, linear and BK-tree. Selecting Linear and clicking ‘Calculate Index’ calculates the linear CEDD index; selecting BK-Tree and clicking ‘Calculate Index’ calculates the BK-tree index.

Vector Descriptors

- SURF

I mentioned the SURF image descriptors in Part 3 of this series. Here, I am using the Emgu CV implementation. The SURF algorithm runs in two parts: first it detects keypoints, and second it calculates descriptors for those keypoints.

    // Initialize the SURF detector
    double hessianThresh = 500;
    SURFDetector surfDectector = new SURFDetector(hessianThresh, false);

    // Calculate the keypoints and descriptors together
    using (Image<Gray, byte> observerImage = new Image<Gray, byte>(strImagePath))
    {
        VectorOfKeyPoint observerKeyPoints = new VectorOfKeyPoint();
        Matrix<float> observerDescriptor = surfDectector.DetectAndCompute(observerImage, null, observerKeyPoints);
    }

In this POC application, the user can define the SURF settings.

Threshold is the Hessian threshold used to initialize the SURF detector. Uniqueness Threshold is the distance threshold used when matching similar descriptors. Good Match is the minimum number ‘x’ of descriptors that must match for an image to be considered similar.
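Here is a minimal sketch of how the last two settings could work together in a linear comparison. It assumes the Uniqueness Threshold is applied as a ratio between the nearest and the second-nearest descriptor distance (a common ratio test), and that Good Match is a minimum count of accepted matches. `DescriptorMatchSketch` is hypothetical, and the application's actual matching code may differ.

```csharp
using System;

public static class DescriptorMatchSketch
{
    private static double Distance(float[] a, float[] b)
    {
        double sum = 0;
        for (int i = 0; i < a.Length; i++)
        {
            double diff = a[i] - b[i];
            sum += diff * diff;
        }
        return Math.Sqrt(sum);
    }

    // Counts query descriptors whose nearest candidate is clearly closer than the second nearest.
    public static int CountUniqueMatches(float[][] query, float[][] candidate, double uniquenessThreshold)
    {
        int matches = 0;
        foreach (float[] q in query)
        {
            double best = double.MaxValue, second = double.MaxValue;
            foreach (float[] c in candidate)
            {
                double d = Distance(q, c);
                if (d < best) { second = best; best = d; }
                else if (d < second) { second = d; }
            }
            // Needs at least two candidates; ambiguous matches (best close to second) are rejected.
            if (second < double.MaxValue && best < uniquenessThreshold * second) matches++;
        }
        return matches;
    }

    // An image counts as similar when at least goodMatch descriptors pass the ratio test.
    public static bool IsSimilar(float[][] query, float[][] candidate, double uniquenessThreshold, int goodMatch)
    {
        return CountUniqueMatches(query, candidate, uniquenessThreshold) >= goodMatch;
    }
}
```

Under these assumptions, lowering the uniqueness threshold makes matching stricter (fewer, more reliable matches), while raising Good Match demands more agreeing keypoints before an image is reported as similar.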

SURF also has two approaches, similar to CEDD:

- Linear

- Flann

Linear compares the descriptors of all the images in a linear fashion. FLANN is the library I mentioned in Part 3 of this series; I am using the Emgu CV implementation of the FLANN index. It takes the descriptors of all the images, creates a super matrix from them, and indexes this matrix with a FLANN algorithm (randomized k-d tree forest). When you perform the k-NN search, it finds the approximate nearest-neighbor descriptors in a matter of milliseconds. You can play around with both implementations to understand the performance impact.

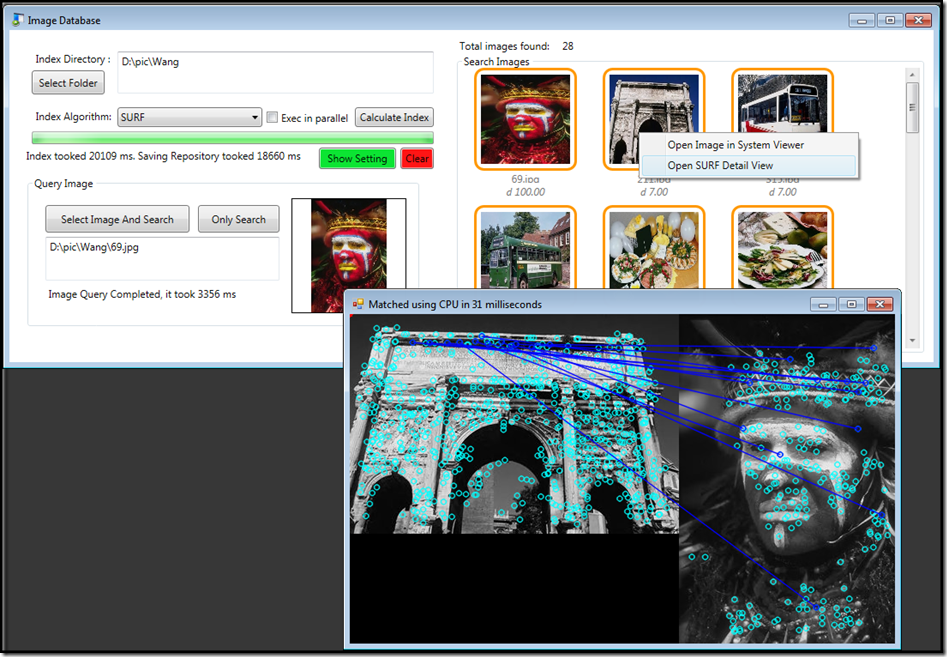

After a query, if you want to check what SURF has matched, you can right-click the query image and see in a window which keypoints have been matched, as shown below.

Note: The SURF algorithm implemented in OpenCV is patented. Royalties need to be paid before using it in a commercial application. The Emgu CV library is under a dual license; if you are developing a commercial project, an Emgu CV license also needs to be purchased.

- Accord SURF

Here, I am using the Accord Framework version of SURF. The Accord Framework implementation is based on the open-source implementation in the OpenSURF computer vision library by Christopher Evans (http://www.chrisevansdev.com), used under the LGPL.

The concept remains the same: it first detects keypoints and then calculates descriptors for those keypoints. Below is a code sample showing how it looks

    double hessianThresh = 500;
    // The OpenSURF detector takes the Hessian threshold as a small float (for example 0.0002f);
    // here 500 / 1000000 gives 0.0005f
    float hessianThreshold2 = (float)hessianThresh / 1000000;
    SpeededUpRobustFeaturesDetector surf = new SpeededUpRobustFeaturesDetector(hessianThreshold2);
    using (Bitmap observerImage = (Bitmap)Image.FromFile(fi.FullName))
    {
        // Detect the keypoints and compute their descriptors in one call
        List<SpeededUpRobustFeaturePoint> observerImageSurfPoints = surf.ProcessImage(observerImage);
    }

In this POC application, the user can define the Accord SURF settings as follows

As with Emgu CV SURF, I implemented two search algorithms for Accord SURF. Linear search compares every descriptor of every image with the query descriptors to find similar images, while with the k-d tree I am trying to build a kind of index over these descriptors. I am using the Accord Framework k-d tree implementation.

In my experience, the k-d tree was not a good fit for this implementation. k-d tree k-NN search performs poorly in higher dimensions (more than about 10), and here the SURF descriptors have 64 dimensions. I need an algorithm similar to FLANN that does approximate nearest-neighbor search, but I could not find such an implementation in .NET. If anybody reading this blog finds one, do let me know in the comments.

After a query, if you want to check what Accord SURF has matched, you can right-click the query image and see in a window which keypoints have been matched, as shown below.

- LoCATe

LoCATe is an algorithm that uses SURF for keypoint detection and CEDD to compute the local descriptors. It was created by Savvas A. Chatzichristofis, the same person who built CEDD. It is available on his website: http://chatzichristofis.info/?page_id=1479.

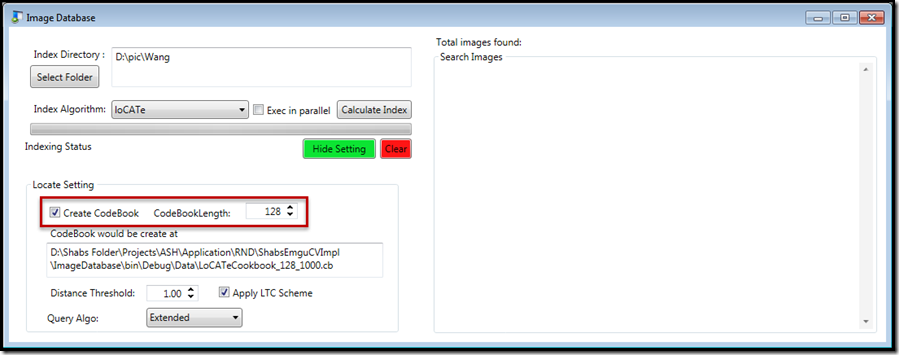

To use LoCATe, you need to do the following:

If you are creating the index for the first time, you can create the codebook by ticking the Create CodeBook checkbox. The textbox below it displays the path where the codebook will be created.

If you are creating the index a subsequent time, you can select the previously created codebook as the reference for indexing.

If you are wondering what a codebook is, see Part 3, where I explained the Bag of Visual Words algorithm. This is how the LoCATe algorithm works:

- It computes the descriptors of all the images in the index directory.

- It takes a 10% sample of the computed descriptors for vector quantization.

- Vector quantization is performed using the k-means clustering algorithm found in the Accord Framework. There is a numeric up-down textbox for Codebook length; this length is used as the number of clusters to calculate. Normally, I use powers of two such as 64, 128, 256, 512, etc.

- K-means clustering finds the 128 (in the given example) cluster mean descriptors from the 10% sample. (This step takes a lot of time; for 1,000 images, it took 3 hours to calculate the means.)

- I take these 128 mean descriptors and save them in the LocateCookBook_{codebook length}_{number of image}.cb file.

- Then I calculate the inverted index of the bag of visual words for each image from these mean descriptors.

- I store this inverted index in another file, which forms the histogram index that I use to perform the query.
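The step that turns an image's descriptors into a bag-of-visual-words histogram can be sketched as follows. It assumes the codebook is already available as an array of mean descriptors (for example from k-means) and that each descriptor is assigned to its nearest codeword by Euclidean distance; `BagOfVisualWordsSketch` is a hypothetical helper, not the application's code.

```csharp
using System;

public static class BagOfVisualWordsSketch
{
    // Returns the index of the codeword (cluster mean) closest to the descriptor.
    public static int NearestWord(double[] descriptor, double[][] codebook)
    {
        int bestIndex = -1;
        double bestDistance = double.MaxValue;
        for (int w = 0; w < codebook.Length; w++)
        {
            double sum = 0;
            for (int i = 0; i < descriptor.Length; i++)
            {
                double diff = descriptor[i] - codebook[w][i];
                sum += diff * diff; // squared distance is enough to find the nearest word
            }
            if (sum < bestDistance) { bestDistance = sum; bestIndex = w; }
        }
        return bestIndex;
    }

    // Counts how often each visual word occurs in an image, then normalizes the counts
    // so that images with different numbers of keypoints remain comparable.
    public static double[] BuildHistogram(double[][] descriptors, double[][] codebook)
    {
        var histogram = new double[codebook.Length];
        foreach (double[] descriptor in descriptors)
        {
            histogram[NearestWord(descriptor, codebook)] += 1;
        }
        if (descriptors.Length > 0)
        {
            for (int i = 0; i < histogram.Length; i++) histogram[i] /= descriptors.Length;
        }
        return histogram;
    }
}
```

With a codebook length of 128, every image ends up as a 128-bin histogram regardless of how many keypoints it has, which is what makes the later comparison cheap.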

Here is what happens when the user searches for an image:

- It computes the LoCATe descriptors for the query image.

- Taking the codebook created earlier as the reference, it computes the inverted index of the bag of visual words for the query image.

- If the Apply LTC scheme checkbox is ticked, it applies the weighting scheme to both the inverted index of the query image and the indexes of all the images.

- Based on the Euclidean distance, it finds the nearest matches and displays the results. It returns a maximum of 25 results; I have hard-coded this value.

- If the ‘Extended’ query algorithm is selected, it performs a further search over the results returned by the bag-of-words search. It runs Emgu CV SURF to find exact or partial matches and weights the ranking based on the CEDD and LoCATe percentage scores.
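The Euclidean ranking step can be sketched like this. It assumes the index is held in memory as a dictionary from image path to histogram; `HistogramRankingSketch` and the `maxResults` parameter are hypothetical (in the application the limit of 25 is hard-coded), and the LTC weighting and the Extended re-ranking are left out.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class HistogramRankingSketch
{
    public static double EuclideanDistance(double[] a, double[] b)
    {
        if (a.Length != b.Length) throw new ArgumentException("Histograms must have the same length.");
        double sum = 0;
        for (int i = 0; i < a.Length; i++)
        {
            double diff = a[i] - b[i];
            sum += diff * diff;
        }
        return Math.Sqrt(sum);
    }

    // Returns up to maxResults (image, distance) pairs, nearest first.
    public static List<KeyValuePair<string, double>> Rank(
        double[] queryHistogram,
        IDictionary<string, double[]> index,
        int maxResults)
    {
        return index
            .Select(entry => new KeyValuePair<string, double>(entry.Key, EuclideanDistance(queryHistogram, entry.Value)))
            .OrderBy(pair => pair.Value)
            .Take(maxResults)
            .ToList();
    }
}
```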

If you have trouble following this article, I strongly advise you to google the “bag of visual words” algorithm and try to understand it.

A point of interest here: the inverted index of the bag of visual words describes, as a histogram, what the image is. In my experiments, the LoCATe algorithm outperformed all the other algorithms mentioned above. It is the best algorithm I could come up with in my research. Extended gives better results for partial matches. A partial match is where the query image is part of one of the images in the set being searched.

On the whole, through this research I found the computer vision field interesting, and the work on CBIR could be improved further. But I ran out of time, and this research had to stop. If you are reading this and this article helped you, or you have any suggestions to improve it, please leave a comment. I would be happy to help you out, if my busy schedule permits 😦

What do you recommend the settings should be for Threshold, Uniqueness Threshold, and Good Match to get the best results but still index 1000’s of images fairly quickly?


It depends on a lot of factors, e.g. the nature of the images: do they have lots of color, is texture important to you, or are they some sort of textual form? What is the image resolution? What does similar mean in your context? And there are a few more factors. I advise playing around with the numbers to see what works best for your set of images.


Looks like they are planning to merge AForge.NET into Accord.NET hopefully soon. I hope they move over FLANN!

https://github.com/accord-net/framework/issues/70


Great Tutorial


Thanks for this post. I learned a lot very quickly that would otherwise have taken much time. The first question and answer touch on this topic, but I still don’t understand how to tune the properties. For Threshold, Uniqueness Threshold, and Good Match, what does increasing or decreasing each setting do?

Also, ImageDatabase tries to load supermatrix.bin or observerFeatureSats.bin (missing) and throws an error. Should I save this file beforehand somehow?


Thanks for reading. It has been almost two years since I last worked on this project. I can’t recollect much, but I will try to answer your queries.

Supermatrix.bin and the other *.bin files are binary files that are created when you index your list of images. You can do that with the “Calculate Index” button on the form, and “show setting” will let you play with the parameters.

Threshold, Uniqueness Threshold, and Good Match are parameters used in calculating the SURF index. Playing with them affects indexing speed, index size, and accuracy. Experiment to find the optimal values for your image set. In my case, I was developing for medical images containing microscopic cell figures; your image set could be different. You need to follow the trial-and-error route to find what works best for your image set. Hope it helps.


Thanks a lot for your great effort.


Hi, it’s a nice article; I came to know lots of things in a single article. I am having some trouble, can you please guide me? While creating the hash using the CEDD algorithm, it generated a memory out-of-bounds exception.

The second thing is that I have about 2 lakh (200,000) images to search. How can I implement it?


This is really great and helped me a lot. I learned a great deal from reading your article.
